- #Install apache spark on redhat without sudo install#

- #Install apache spark on redhat without sudo archive#

#Install apache spark on redhat without sudo install#

With EEP 6.3.0 and later, you can install the HBase Client and tools even if you decide not to install HBase as an ecosystem component.

Beginning with EEP 6.3.0, MapR 6.1 reintroduced HBase as an ecosystem component.

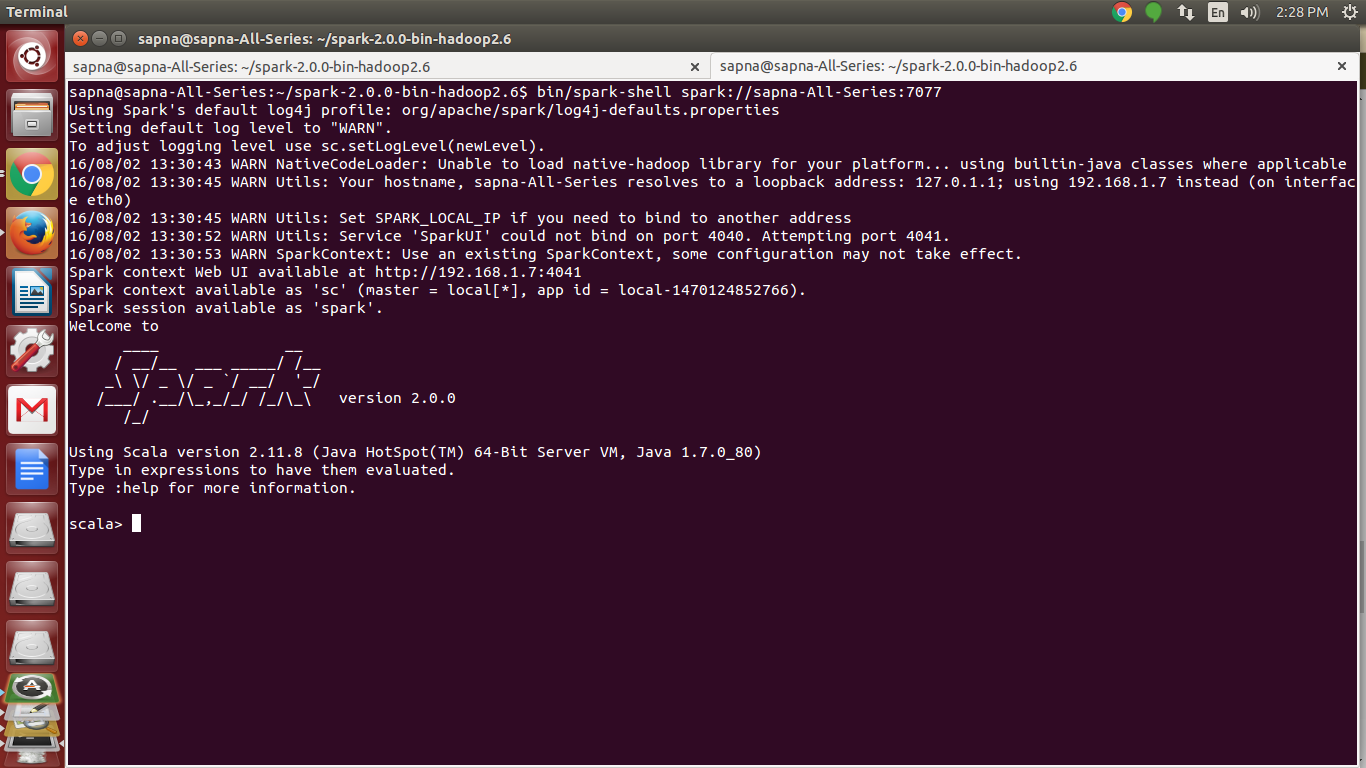

MapR 6.0.x does not support HBase as an ecosystem component.

I am in the process of installing Spark on my organization's HDP box. Running `yum install spark` installs Spark 1.4.1.

When you extract the downloaded Spark archive, the output shows the files that are being unpacked. Finally, move the unpacked directory spark-3.0.1-bin-hadoop2.7 to the /opt/spark directory. Use the mv command to do so: `sudo mv spark-3.0.1-bin-hadoop2.7 /opt/spark`

The terminal returns no response if it successfully moves the directory. If you mistype the name, you will get a message similar to: `mv: cannot stat 'spark-3.0.1-bin-hadoop2.7': No such file or directory`

#Configure Spark Environment#

Before starting a master server, you need to configure environment variables. There are a few Spark home paths you need to add to the user profile. Use the echo command to add these three lines to .profile:

- `echo 'export SPARK_HOME=/opt/spark' >> ~/.profile`
- `echo 'export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin' >> ~/.profile`
- `echo 'export PYSPARK_PYTHON=/usr/bin/python3' >> ~/.profile`

Use `>>` so that each line is appended; a single `>` overwrites the file, and only the last line would survive. The single quotes keep `$PATH` and `$SPARK_HOME` unexpanded until the profile is read.

You can also add the export paths by editing the .profile file in the editor of your choice, such as nano or vim. For example, to use nano, enter: `nano ~/.profile`. When the profile loads, scroll to the bottom of the file and add the three export lines there.

Two related tools come up alongside Spark. Apache Zeppelin (Apache 2.0 license) is a web-based notebook that enables interactive data analytics; you can make data-driven, interactive, and collaborative documents with SQL, Scala, and more. Installing RStudio Server, SparkR, and sparklyr, and then connecting to a Spark session within an EMR cluster, requires its own series of steps.
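The profile step can be made safe to repeat. The following is a minimal sketch, not the tutorial's own method: `PROFILE_FILE` and `add_profile_line` are hypothetical names introduced here, and the helper appends each export line only if an identical line is not already present. It keeps the /opt/spark location used in this article; adjust it if Spark lives elsewhere.

```shell
# Sketch: add the Spark exports to a profile file without creating duplicates.
# PROFILE_FILE and add_profile_line are illustrative names, not part of Spark.
PROFILE_FILE="${PROFILE_FILE:-$HOME/.profile}"

add_profile_line() {
    # Append the given line only if an identical line is not already in the file.
    local line="$1"
    touch "$PROFILE_FILE"
    grep -qxF -- "$line" "$PROFILE_FILE" || printf '%s\n' "$line" >> "$PROFILE_FILE"
}

# Single quotes write $PATH and $SPARK_HOME literally; they expand when the
# profile is sourced, not when this script runs.
add_profile_line 'export SPARK_HOME=/opt/spark'
add_profile_line 'export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin'
add_profile_line 'export PYSPARK_PYTHON=/usr/bin/python3'
```

After running it, load the new variables into the current session with `. ~/.profile`, or log out and back in.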

#Install apache spark on redhat without sudo archive#

Note: If the URL does not work, please go to the Apache Spark download page to check for the latest version. Remember to replace the Spark version number in the subsequent commands if you change the download URL.

Now, extract the saved archive using tar: `tar xvf spark-*`

For a Windows setup, install WSL on a system or non-system drive on Windows 10 and then install Hadoop 3.3.0 on it:

- Install Windows Subsystem for Linux on a Non-System Drive (mandatory)
- Install Hadoop 3.3.0 on Linux

Now let's start to install Apache Hive 3.1.2 on WSL.
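For an install that avoids sudo entirely, the extract and move steps can target a directory you own instead of /opt. The sketch below is an assumption-laden illustration: `extract_spark` is a hypothetical helper, and the archive file name and the `$HOME/spark` destination in the usage comment are examples rather than values from this article. It refuses to overwrite an existing destination and reports a missing archive instead of failing silently.

```shell
# Sketch: unpack a Spark archive and move it to a user-writable directory.
# extract_spark is an illustrative helper name, not a Spark or tar command.
extract_spark() {
    local archive="$1"
    local dest="$2"
    if [ ! -f "$archive" ]; then
        echo "archive not found: $archive" >&2
        return 1
    fi
    if [ -e "$dest" ]; then
        echo "destination already exists: $dest" >&2
        return 1
    fi
    local workdir
    workdir=$(mktemp -d) || return 1
    # The archive unpacks into one top-level directory named spark-<version>-...
    tar -xf "$archive" -C "$workdir" || return 1
    mkdir -p "$(dirname "$dest")"
    mv "$workdir"/spark-* "$dest" && rmdir "$workdir"
}

# Example (assumed file name and destination):
#   extract_spark spark-3.0.1-bin-hadoop2.7.tgz "$HOME/spark"
```

With this layout, `SPARK_HOME` would point at the chosen destination (for example `$HOME/spark`) rather than /opt/spark.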
